So, as I said, this is an early version. It uses music that I absolutely have no rights to, but I fell for this track so hard in high school that I sorta had to use it : )

One of the challenges with designing this was how to signal to the user exactly where and when they need to catch the juggling pin. Turns out, some of our depth cues in VR are totally borked. While I was playtesting this, some people couldn't make any sense of virtual clubs flying at their face, while others were immediately able to grok the experience.

My suspicion is that different people rely more heavily on different depth cues. Some rely more heavily on binocular vision, while others rely more on comparing objects to their contexts. I built a handful of little things to try to accommodate as many depth cues as possible, many of which aren't present in the video above. Unfortunately, some depth cues cannot be triggered by our current batch of tech, so I feel totally fine completely ignoring them for now...

In any case, here are a few things that I found helpful in setting the player up for the best catching experience possible:

Use Objects Designed for Catching

My very first pass was baseball-sized balls. Baseballs, I feel, are actually great throwing objects, or even great objects to swing at, but not really great catching objects. They're hard to see, and in practice, they require special equipment to catch. Playing with baseball-sized balls wasn't fun because they were just as difficult to catch as they were to see (even if the collider was unnaturally large).

I then went to football- / basketball-sized balls, which felt way better, but they seemed to require too much of my body -- more than I felt was actually present inside the virtual environment. I feel objects at this scale almost require one's center of mass to be involved, like, just one step away from what's required of a medicine ball.

Of course, when you catch a football or basketball, you're usually doing it with two hands, and I think I realized I wanted a thing to catch with one hand, which led me to juggling pins.

What's really great about juggling pins is that not only do they afford one-handed catching, but they are also designed to communicate the physics of a throw, both to the performer and the audience. This ability to broadcast data through physics felt like a beautiful fit for what I was going for.

Model upon Evocative Experiences

Another plus of juggling pins is that there was a clear practice that I could draw from, and that this practice was all about the joy of catching things. It took me a bit to realize that I should model throw trajectories and spins upon what you might experience when, IRL, someone passes you a real juggling pin.

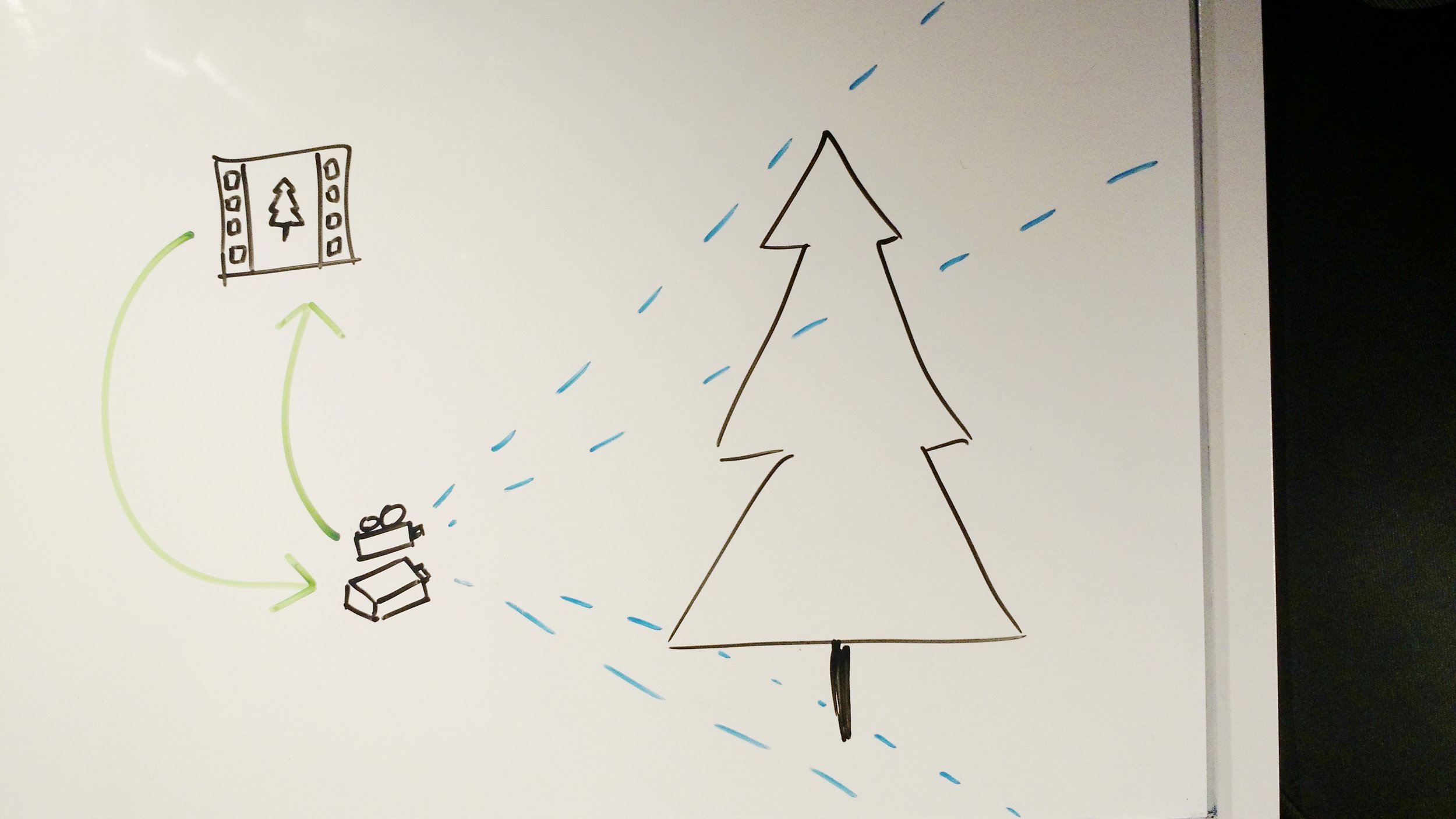
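
For the curious, here's roughly how a pass like that could be set up in code. To be clear, this is a minimal sketch and not the code from the prototype: it assumes plain ballistic motion with no drag, a y-up coordinate system in meters, and made-up helper names (`launch_velocity`, `spin_rate`, `position_at`). The idea is to pick where and when the pin should arrive, then solve backward for the launch velocity and spin.

```python
import math

# Assumed convention: y is up, units are meters and seconds.
GRAVITY = (0.0, -9.81, 0.0)


def launch_velocity(start, target, flight_time, gravity=GRAVITY):
    """Velocity that carries a projectile from start to target in flight_time seconds."""
    if flight_time <= 0:
        raise ValueError("flight_time must be positive")
    # From p(t) = start + v*t + 0.5*g*t^2, solve for v at t = flight_time.
    return tuple(
        (t - s) / flight_time - 0.5 * g * flight_time
        for s, t, g in zip(start, target, gravity)
    )


def spin_rate(rotations, flight_time):
    """Angular speed in rad/s so the pin completes `rotations` turns during the flight."""
    if flight_time <= 0:
        raise ValueError("flight_time must be positive")
    return 2.0 * math.pi * rotations / flight_time


def position_at(start, velocity, t, gravity=GRAVITY):
    """Position of the projectile t seconds after launch."""
    return tuple(
        s + v * t + 0.5 * g * t * t
        for s, v, g in zip(start, velocity, gravity)
    )
```

Calling `launch_velocity((0.0, 1.2, 4.0), (0.3, 1.4, 0.4), 1.0)` gives a throw that starts four meters in front of the player and lands near their hand one second later. Using a whole number of rotations in `spin_rate` means the pin arrives in the same orientation it left with, which, as I understand it, is what a real passer aims for so the handle ends up in the catcher's hand.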

Provide Visual / Physical Context

In the very beginning (and in the POC video above), I focused exclusively on the catching mechanic and ignored the rest of the game world... figuring that would be a thing to add later when working on theme or story or something.

I soon realized after testing that the lack of visual context was making it harder to catch -- a little bit for most people, but virtually impossible for others. One user reported that the flat blue skybox made it feel like there was a wall right in front of their face everywhere they looked.

So I spent a bit more time working on the environment, peppering the user's periphery with other non-distracting geometry. I think it did two things: (1) proprioceptively anchored them into a room and asserted their physical presence, making it more meaningful that juggling pins were being sent their way and (2) provided objects in the distance to help them contextualize the incoming trajectories of the juggling pins, giving them more information to help them catch the pins.

Emit Light from Hands to Augment Presence

To be completely honest, I stumbled upon this trick and only have thoughts as to why this works so damned well, but don't have all the answers, so I'll just blab a bit on the topic.

So, I discovered that when comparing the Vive Controllers with these 3d objects, something just felt disconnected. Sure, conventional world lights bounce off the controller and digital 3d objects in the same way, but somehow it's still really easy for my brain to consider the controller and the digital 3d objects as belonging to separate worlds.

IOW, the controller is human-driven, like a computer mouse, while the digital object somehow belongs to a computer. I think my brain just compartmentalizes them separately, and it just doesn't accommodate as graceful a transition between the two states as I would like.

HOWEVER!

If you place a point light on the user's hand... uhm, it feels totally magical. Like, suddenly, these digital objects are painfully compelling. These objects begin to feel more like extensions of the body and less like pure, flat data.

I suspect that hand-driven dynamic lighting triggers something in our perceptual systems that helps us model 3d spaces in relation to our bodies. The crucial part of this is the implication of the body, I believe -- because things only feel physically available once the user sees them in relation to their body, not in relation to a 3d model.
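
One way to picture what the hand light adds: the closer a pin gets to the hand, the brighter it gets, so brightness becomes a running readout of hand-to-object distance. Here's a tiny sketch of that relationship. The falloff curve, the `light_range` default, and the function name are all assumptions for illustration, not the formula any particular engine uses.

```python
import math


def point_light_intensity(light_pos, surface_pos, light_range=1.5, base_intensity=1.0):
    """Brightness a hand-mounted point light adds at surface_pos.

    Uses a hypothetical smooth falloff that reaches zero at light_range (meters).
    """
    distance = math.dist(light_pos, surface_pos)
    if distance >= light_range:
        return 0.0
    falloff = 1.0 - distance / light_range
    # Squaring makes the light ramp up more sharply as the object nears the hand.
    return base_intensity * falloff * falloff
```

With these assumed defaults, a pin 0.75 m from the hand gets a quarter of the full brightness, and one touching the hand gets all of it. That may be part of why the light helps: the pin visibly responds to the hand before the catch ever happens.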

... anyhow, this is something I'll be turning over in my head a while...